Are the ‘Twitter Files’ a Nothingburger?

There are real concerns about moderation, bias, and transparency—but the polemics are veering into ugly conspiracism.

THE “TWITTER FILES” SAGA, in which a few journalists handpicked by Elon Musk have been posting selected bits of information about Twitter’s pre-Musk operations, is now entering its second week and taking the cacophony of predictable ideological polemics to new highs, or rather new lows. It has also been subsumed in the bigger drama of Musk’s war with the “legacy” Twitter leadership, which includes the abrupt dissolution of Twitter’s Trust and Safety Council moments before it was to hold its first Musk-era meeting on Zoom. And the whole story has now devolved into QAnon- and Pizzagate-style pedophilia accusations abetted and instigated by Twitter’s new boss.

This ugly turn in the story is appalling for many reasons, not least because the issue the Twitter Files disclosures purport to address—inconsistent, non-transparent, and politically skewed management of social media platforms that are important venues for political speech—is a real problem. But Musk’s culture-war wrecking ball approach is making things far worse.

THE TWITTER FILES have been billed as a major exposé of left-wing Twitter censorship. Musk, whose $44 billion acquisition of Twitter was ostensibly motivated by grievances against such speech-policing—and, in a larger context, by his spats with progressive journalists—has presided over the release of selections from internal documents made available to three writers on Substack: Matt Taibbi, a left-wing gadfly reporter; Bari Weiss, a center-right former New York Times staff editor; and Michael Shellenberger, a maverick environmental advocate and two-time California gubernatorial candidate. (There was a flurry of amusement when the Washington Post referred to Weiss and Taibbi as “conservative journalists,” then removed the label in an update without specifying what the update was. The label is certainly inaccurate, but it’s fair to say that all three writers belong to a political subculture oppositional to the progressive consensus.) But do these “files”—screenshots of Slack messages and other documents showing how decisions were made to remove tweets, suspend accounts, or limit the visibility of some posts and users—show nefarious political suppression of free speech or legitimate and even necessary moderation?

Broadly, the reactions are divided into two camps: those who believe the revelations are a major scandal vindicating the worst conservative/populist accusations against “Big Tech,” and those who believe that the revelations are a “nothingburger” dressed up to look like a major scandal. Let’s call them Team Scandal and Team Nothingburger.

Here’s where Team Scandal has a point, however self-serving. Ever since Twitter pivoted from relatively untrammeled speech to increasingly intrusive moderation, the lack of clear rules and transparency—coupled with ideological slant—has been a problem. (For the record, I have been writing about this since 2015.) Numerous people have been suspended or permanently banned for vague and elusive reasons. Yes, many of them were people with odious opinions, such as far-right blogger Robert Stacy McCain, whose off-Twitter history includes Confederacy apologetics, defenses of “natural revulsion” toward interracial marriage, and anti-gay rants. But bad people are not an excuse for bad policies. When McCain was banned from Twitter in early 2016 for unspecified “targeted abuse,” even some people who stressed their loathing for him, such as “Popehat” blogger Ken White, saw this as troubling evidence of arbitrary and biased enforcement. (White, an attorney, insisted that no First Amendment issues are at stake since private corporations have a legal right to run their platforms any way they see fit but argued that arbitrary suspensions and expansive definitions of abuse are “bad business.”)

In other cases, as the Twitter Files releases confirm (and as not only the right-wing press but mainstream media outlets have reported before), steps were taken to reduce some posters’ visibility and reach without informing the targeted individuals or allowing them to appeal. The measures could include algorithmic “deboosting,” which made the user’s tweets less likely to show up in followers’ feeds, and blocking the user’s tweets from trending or showing up in searches.

The polemics over the Twitter Files have often focused on whether Twitter executives lied when they said in 2018 that they “do not shadow ban,” and most certainly not “based on political viewpoints or ideology.” To some extent, this argument boils down to a disagreement over terminology. People who use the term “shadow banning” loosely to mean lowering the visibility of a person’s tweets claim that the execs lied, because they did just that. But Twitter officials talked openly about the distinction they drew between shadow banning, which the company defined strictly as making a person’s tweets completely invisible to anyone but the user, and other practices it put under the heading of “visibility filtering.” The same Twitter executives who denied they shadow banned users readily conceded that they “rank” tweets and downrank ones from “bad-faith actors who intend to manipulate or divide the conversation.”

Fair enough; but this honest admission should still be worrying. Who gets to classify someone as a “bad-faith actor”? What constitutes manipulation and divisiveness as opposed to “healthy” polemics and exchanges?

And “based on political viewpoints or ideology” is also not as simple as it seems at first glance, since many people don’t see their political biases as biases. Thus, content moderators who allow self-proclaimed anti-fascists to get away with much more violent language than MAGA activists, or who allow progressives to attack conservative women or minorities with sexual or racial slurs that would not be tolerated from right-wingers, may sincerely believe that it’s not about politics but about good guys vs. bad guys. For the same reason, an online dogpile that includes the release of personal information (“doxxing”) and attempts to get someone fired may be rightly seen as harassment if the target is, say, a parent who brings a child to Drag Queen Story Hour, but not if it’s a “Karen” dubiously accused of racist behavior (such as calling a non-police parking hotline to report an illegally parked car whose owners turn out to be black).

Indeed, then-Twitter CEO Jack Dorsey openly discussed this issue in 2018 even as he denied that Twitter was shadow banning Republicans or making decisions based on ideology. In an August 2018 interview, Dorsey told CNN’s Brian Stelter:

We need to constantly show that we are not adding our own bias, which I fully admit is left, is more left-leaning. And I think it’s important to articulate our bias, and to share it with people so that people understand us, but we need to remove all bias from how we act, and our policies, and our enforcement.

Dorsey’s commitment to fairness seems genuine. But this dedication to neutrality has, over the years, inevitably come into conflict with the pro-“social justice” climate in the major tech companies, including Twitter. It is notable, for instance, that when Wired magazine hosted a roundtable discussion on solving online harassment in late 2015 featuring Del Harvey, Twitter’s vice president of trust and safety, in conversation with several “experts” on the issue, every expert was a progressive activist, and Harvey herself expressed concern that tools developed to curb online abusers could be turned around against “marginalized group[s].” Likewise, anti-abuse initiatives at Twitter and other tech companies have been heavily dominated by activists who tended to support not only broad restrictions on “oppressive” speech in the name of “safety” but double standards favoring “marginalized” identities over the “privileged.” When Twitter announced the creation of its Trust and Safety Council in February 2016, Ken White, generally skeptical of right-wing grievances about social media tyranny, nonetheless wrote that the council “appears calculated to have a narrow view of legitimate speech and a broad view of ‘harassment’ (at least insofar as it is uttered by the wrong people).”

(In an ironic twist, White announced his departure from Musk-era Twitter hours before the Trust and Safety Council bit the dust.)

And yet ultimately, evidence of systematic political bias in Twitter’s enforcement of its rules remains sketchy at best, and the Twitter Files have not yet changed that. Caroline Orr Bueno, a behavioral scientist and disinformation specialist whose politics can be fairly described as left, has disputed Musk’s claim of pro-left, anti-right bias by pointing out that her own account was banished to semi-invisibility limbo for two years for a bad reason.

Of course, with left-wing as with right-wing claims, the plural of “anecdote” is not “data,” and perhaps Orr’s anecdote doesn’t prove what she thinks it does, since she mentions that her situation was ultimately “fixed” with the help of a Twitter employee.

Because of unreliable information and subjective interpretations, the question of social media bias easily lends itself to endless and ultimately unproductive polemics. In 2020, were social media giants correct in trying to limit the reach of content that was classified as election-related or COVID-related misinformation? Should potentially explosive erroneous or misleading information on such issues as police brutality (e.g., claims that Columbus, Ohio police “murdered” Ma’Khia Bryant, a black teenager shot while trying to stab another young woman) have been treated the same way? Do Twitter rules against “misgendering” and “deadnaming” transgender-identified people protect a vulnerable minority from vicious harassment or curb legitimate debate in an area where mainstream cultural consensus is still in flux, especially when bans and suspensions target not only hostile speech directed at trans individuals but general commentary about gender identity?

In the end, I think it’s hard to disagree with David French when he writes:

The picture that emerges is of a company that simply could not create and maintain clear, coherent, and consistent standards to restrict or manage allegedly harmful speech on its platform. Moreover, it’s plain that Twitter’s moderation czars existed within an ideological monoculture that made them far more tolerant toward the excesses of their own allies.

French believes that, as a platform that claims to be “a place that welcomes all Americans,” Twitter should model its moderation policies on the principles we associate with the First Amendment: banning threats, targeted harassment, defamation, and invasions of privacy but otherwise remaining strictly viewpoint-neutral. There’s a case to be made for private platforms restricting—either through bans or through visibility filters—fringe speech that, while protected by the First Amendment, is rightly kept outside the mainstream in the offline world, such as explicit racial or sex-based hatred or advocacy of violence that does not rise to the level of immediate incitement. But at least within a generous Overton window, viewpoint neutrality should be the norm, and it’s one from which pre-Musk Twitter frequently if not routinely strayed.

AND NOW A WORD for Team Nothingburger.

For one thing: yes, “shadow banning” in the sense of “visibility filtering” was far more openly acknowledged in mainstream venues than the right is claiming today. The January 1, 2020, update of the Twitter terms of service says that the platform “may . . . limit distribution or visibility of any Content on the service.” Some critics have argued that the July 26, 2018, blog post by Twitter executives denying “shadow banning” is deceptive because of the very narrow definition it uses. But how can a post which openly states that Twitter can deliberately make certain tweets very hard to find be considered “deceptive”? Likewise, the supposed wall of media silence about shadow banning is contradicted by some of the very articles that make this critique.

Thus, a new National Review piece which skewers shadow-banning deniers also mentions that the issue first gained traction in July 2018 after Vice News reported that “a number of Republican politicians’ accounts were not appearing in suggested searches on the site when their names were typed in.” And one can easily find other articles in mainstream, center-left outlets discussing the apparent problem of content suppression by Big Tech. Last April, the Atlantic ran a piece by Gabriel Nicholas, a research fellow at the Center for Democracy and Technology, titled “Shadowbanning Is Big Tech’s Big Problem.” Nicholas argued that content filtration or suppression was sometimes a “necessary evil” but advocated maximum openness to minimize its harmful effects, including the erosion of trust in social media moderation.

There’s also the question of exactly what the Twitter Files show. Much of the information that has been presented so far has been divorced from any useful context. For instance:

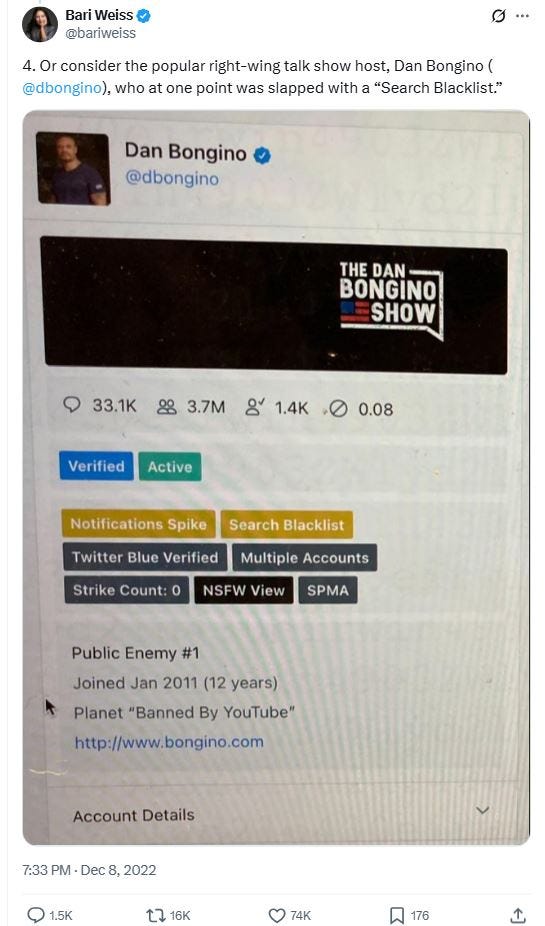

So Dan Bongino, a zealous “Stop the Steal” propagandist and practically a caricature of the Trumpist “conservative” (“My entire life right now is about owning the libs”), got hit with a search ban “at one point.” When? For how long? For what offense? Crickets. Nor do we know why or for how long Twitter de-amplified Turning Point USA president Charlie Kirk—who is, shall we say, not exactly silenced. As for the ban on Stanford professor of medicine and prominent COVID-19 contrarian Dr. Jay Bhattacharya, it was presumably related to what Twitter classified as COVID misinformation: Bhattacharya has been a vocal critic of lockdowns, masking and vaccine mandates. Let’s leave aside for now the question of whether he is a brave truth-telling dissenter or a dangerously wrong COVID quack. The more salient fact for the purposes of the Twitter Files is that the company’s policy of seeking to limit the reach of content which bucked consensus science on COVID should surprise exactly no one: in this case, at least, Twitter was quite transparent.

In instances when the Twitter Files do supply context, the revelations often turn out to be far less damning than they’re made out to be. For instance, an examination of how Twitter’s moderation “czars” handled tweets alleging election-rigging or election-theft plots in the weeks before the 2020 election suggests that, despite their own political biases, they were making a good-faith effort to be fair. Some posts from Democrats fanning hysteria about election-stealing via U.S. Post Office shenanigans were allowed to stay up with no warning labels; but so was a Donald Trump tweet crying “A Rigged Election!” over news of 50,000 Ohio voters getting “wrong absentee ballots.” Some Twitter staffers thought “Rigged Election” warranted a warning; Twitter’s then-head of Trust and Safety, Yoel Roth, overruled them because the underlying claim about misdelivered absentee ballots was factual. (It’s also far from clear, by the way, that suppressing Trump’s pre-election outbursts about vote-rigging hurt Republicans at the ballot box.)

SO IS THERE EVIDENCE of political bias or censorship in the Twitter Files? The generally skeptical Eric Levitz, in New York magazine, sees probable unfair enforcement in the treatment of Chaya Raichik of “Libs of TikTok” fame: Raichik was repeatedly suspended for “hateful conduct” policy violations, but a tweet disclosing her home address was allowed to stay up. And Levitz omits another detail: an internal document acknowledged that none of Raichik’s tweets were “explicitly violative,” but justified the suspensions on the ground that her content, which often denounces “gender-affirming healthcare” for transgender-identified minors, leads to harassment of LGBT people as well as medical personnel. Again, you can think that “Libs of TikTok” is a terrible account and that Raichik, who has called for the blacklisting of all openly gay teachers, is a bigot; but punishing Twitter users for the actions of people who read their tweets is a slippery slope.

You could say that one case of apparently clear-cut ideological favoritism is slim pickings. Even so, the question of Twitter enforcement bias (slanted left pre-Musk, possibly slanted right under Musk) is a story worth exploring. At least as long as Twitter positions itself as a politically neutral universal-access platform, such bias is a valid issue, and so is non-transparency. But the release of selected tidbits by a small group of journalists from (broadly) a single media subculture is itself rife with bias and non-transparency; in the end, we’re still in the dark.

To take just one example: Stephen Elliott, a left-wing writer who was targeted in the “Shitty Media Men” list in the early days of #MeToo and is now suing the list’s creator, is certain that his account was being “throttled” pre-Musk so that followers who used the “top tweets” rather than “latest tweets” setting rarely saw his posts. Elliott, who says that he has seen a dramatic spike in engagement from followers since the Musk takeover, is convinced that he was disfavored under the “old regime” because of his lawsuit. The change in engagement is unprovable because Elliott frequently mass-deletes his old tweets, and it’s possible that his increased visibility is due to a couple of recent articles that mention his case. But is it possible that some Twitter progressives who saw him as an anti-#MeToo figure were flagging his account, resulting in tacit strikes that were recently removed? Sure, and if so, that would be a definite abuse of power. But the intrepid investigators of the Twitter Files don’t appear to have any interest in such stories; Elliott told me on Tuesday that he has reached out to both Weiss and Taibbi to see if he was on any blacklists but received no response.

OVER THE WEEKEND, the Twitter/Musk debacle went into overdrive as Musk escalated his trolling with a sneering call for the prosecution of Anthony Fauci and began to drop innuendo that Yoel Roth, who had left Twitter after an initially amicable relationship with the new boss, was either a pedophile or a “groomer” promoting child sexualization. Meanwhile, the Twitter Files chronicled Twitter’s internal flailing about how to handle Donald Trump after the January 6th riot and suggested that he was unjustly banned even though his tweets that day technically did not violate any rules. Together, these threads activated the true fever swamp of the online right: the January 6th apologists and the pedophilia-obsessed QAnon crowd. Even Pizzagaters from six years ago have resurfaced, latching on to the false rumor that former Twitter Trust and Safety Council member Lesley Podesta, an Australian policy consultant and former government official, was the niece of John D. Podesta, the Democratic Party operative whose references to pizza in emails hacked in 2016 somehow came to be seen as code words for a child sex ring.

Is Musk instigating a dive into far-right Trumpian conspiracy theory? It is undeniable that a massive portion of the Twitter Files flirts with January 6th apologism by suggesting that (1) yes, the election was “rigged” or “stolen” because of Twitter “interference” (Musk’s disclaimer that he believes Trump would have lost anyway does not nullify the implication that the process was corrupted); and (2) it was absolutely outrageous for Twitter to treat the violent invasion of the U.S. Capitol by Trump supporters seeking to overturn the election and egged on by the president and his cronies as an event that warranted extraordinary measures.

The second element of this toxic brew is the QAnon bait that Musk, aided by a number of alt-right Twitter figures, has been flinging about with his “pedo guy” insinuations against Roth. It’s plain that Roth’s history of inflammatory posts, such as tweets referring to the Trump administration as “ACTUAL NAZIS IN THE WHITE HOUSE” and to Trump strategist Kellyanne Conway as “Joseph Goebbels,” made him a poor fit for a high-level moderation post on a platform like Twitter. But the pedophile innuendo has been truly vile. It rests on a misinterpreted passage from Roth’s 2016 dissertation, which examines data from the Grindr gay dating network: Roth argues that since minors already use Grindr, the service should create a protected area for teenagers who want to connect with other gay teens, with lewd and hookup-oriented content filtered out. These attacks also engage in the very same “offense archeology” for which many conservatives have rightly criticized the “woke left.” The Musk mob has unearthed a 2012 tweet in which Roth jokes about sounds next door that could be either a crying infant or loud sex, as well as a 2010 link to a Salon article asking if high school students can ever meaningfully consent to sex with teachers. (The article specifically discusses cases of legally adult students.) The Washington Post reports that Roth has left his home out of safety concerns.

At this point, Musk’s use of Twitter to galvanize the craziest elements of the MAGA far right and to sic them on “enemies” from Twitter’s “old regime” overshadows both the value of his arguably positive pro-transparency moves, such as the announcement of a software update that will allow users to see whatever restrictions have been placed on their account, and anything of interest his disclosures may offer. The Musk/Twitter saga has crossed the line into shocking ugliness, and any journalists assisting in it are tainted by the association.